Generative AI - explained like an ice cream

Hardly anyone can have missed the new cool kid in town, Generative AI, which has recently swept in like a storm. But how does it work, and what do all the terms mean? It's easy not only to feel confused by all the buzzwords but also to give up on trying to understand...

But we won't do that! Instead, we'll try to explain things in a way where the technology doesn't get in the way of overall understanding and curiosity. Whether I succeed remains to be seen, but for those who make it through all the theory, a reward awaits at the end 🍿.

Before we delve into the Generative AI hype, let's first sort out some basics.

Basic stuff

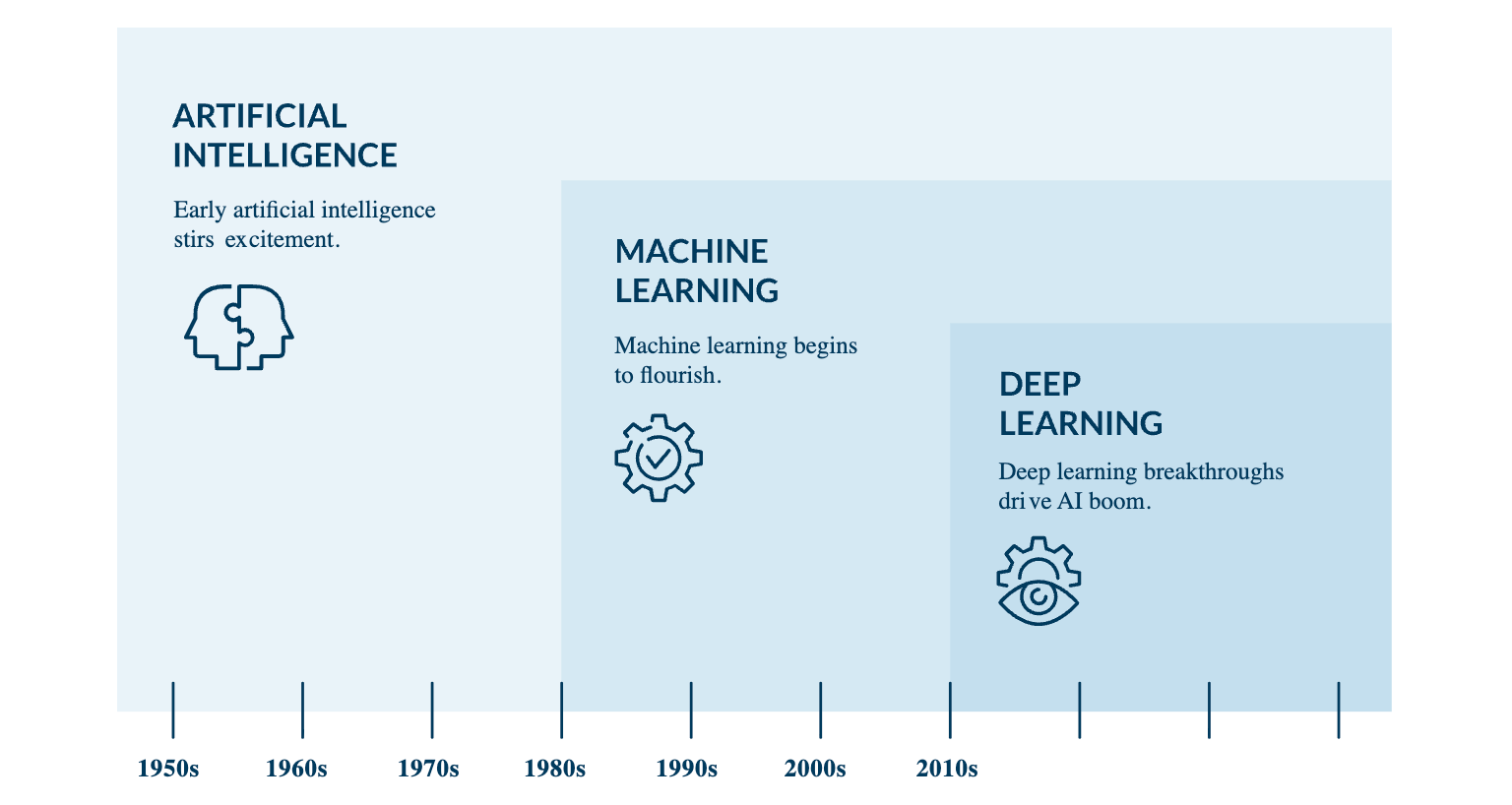

AI is far from something new. The idea of machine intelligence dates back to at least the 1940s, and the term 'artificial intelligence' was coined at a conference in 1956. But only in recent years has the technology seriously begun to reach great heights, both in terms of innovation and in how high our eyebrows rise in astonishment.

Today, AI is an umbrella term for the various ways in which machines can perform tasks that normally require human intelligence.

However! When we in 2023 are amazed by AI's increasingly astonishing abilities, it is two of its more advanced subfields that are behind it: Deep Learning (DL) and Artificial Neural Networks, where deep learning essentially means neural networks with many layers.

What then is Generative AI?

What's new in the past few years is that AI (with the help of deep learning and neural networks) has started to create truly impressive content. And not only that: it can generate entirely new content that didn't exist before.

Before Generative AI, smart algorithms were mainly used for things like analysis, optimization, automation, and filtering. Now, the technology can also rapidly create things like:

- Text: Write texts, summarize books, and answer our questions. You've undoubtedly tried services like ChatGPT or Google Bard.

- Image: Generate creative and high-quality images. Midjourney, DALL·E and Stable Diffusion are some examples.

- Code: Generate program code using solutions like GitHub Copilot or Amazon CodeWhisperer.

Generative AI can also produce sound, compose music, transform 2D into 3D, produce films, and much more.

In all likelihood, the years 2022 and 2023 will go down in AI history as turning points where Generative AI changed the rules of the game when it comes to content creation. And in the long run, a lot of other things as well.

Great. Now we know what Generative AI is. Let's now indulge in some nerdy fun.

The Building Blocks of Generative AI

While preparing this article, I tried to find an educational overview that puts the building blocks of Generative AI into some graspable perspective. That didn't go so well. For one, most are far too focused on technical details. Secondly, they are often incredibly boring.

So, with the help of Midjourney and a simple design program, I created my own, slightly more creative explanation model based on ice cream (hey, who doesn't like dessert!). The rest of this text focuses on the cone in the model: the foundation that explains why Generative AI both works and is completely exploding right now.

👋🏼 Say hello to Foundation Models, LLMs, Transformers and Diffusion Models.

Let's take them one at a time.

➡️ Foundation Models

Foundation Models are a crucial reason why Generative AI has exploded so quickly. Start by watching the video below (it's only two minutes long) to grasp what role these foundation models play. The video comes from the Stanford Institute for Human-Centered Artificial Intelligence (HAI) and also discusses the opportunities and risks associated with these disruptive Foundation Models.

Training a Foundation Model requires, among other things, deep expertise in machine learning, mathematics, and statistics. It also takes a large number of powerful computers, enormous amounts of data, and often a lot of money. It's not surprising, then, that many of the larger foundation models out there have been developed by well-known companies like Google, Meta, IBM, and OpenAI.

Thanks to Foundation Models, anyone who wants to build their own Generative AI solution doesn't need to do the heavy lifting themselves. Instead, they can use one or more of the hundreds of available foundation models and tailor them to how they want their own solution to work.

Beyond the Basics:

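- Foundation models are trained on enormous amounts of data using self-supervised and/or semi-supervised learning. In self-supervised learning, the model learns from structure in the data itself (for example, by predicting the next word in a text) instead of relying on examples labeled by humans.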

- To get a Foundation Model to behave the way you want, there are different ways to customize it. One method is Fine-Tuning (you 'fine-tune' an already trained model using new data adapted to a specific task). Another is Prompt Tuning (a lighter form of customization where you steer the model's behavior through prompts, leaving the model's own weights unchanged).

- There are both foundation models that are controlled by companies (such as GPT-4, developed by OpenAI) and those that are open source (such as Llama 2, developed by Meta and released in partnership with Microsoft). You can read more about the differences in the article 'Making foundation models accessible: The battle between closed and open source AI'.
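For the curious, here is a deliberately tiny Python sketch of the idea behind fine-tuning. It is not how GPT-4 or Llama 2 are actually tuned: it assumes a made-up "model" with just two numbers (a straight line) whose hypothetical "pretrained" values stand in for a foundation model, plus a small, equally hypothetical task dataset. The only point is that fine-tuning starts from already-trained parameters and nudges them with a little new data, instead of training from scratch.

```python
# Toy illustration of fine-tuning (assumption: a one-feature linear model,
# y = w * x + b, stands in for a foundation model with billions of parameters).

def predict(params, x):
    w, b = params
    return w * x + b

def mse(params, data):
    """Mean squared error of the model on a list of (x, y) pairs."""
    return sum((predict(params, x) - y) ** 2 for x, y in data) / len(data)

def fine_tune(params, data, lr=0.01, epochs=500):
    """Continue training from existing parameters with plain gradient descent."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)

# Hypothetical "pretrained" parameters: already roughly right for the task.
pretrained = (2.0, 0.0)
# Hypothetical task-specific data that follows y = 2.5x + 1.
task_data = [(1.0, 3.5), (2.0, 6.0), (3.0, 8.5), (4.0, 11.0)]

tuned = fine_tune(pretrained, task_data)
print(f"loss before fine-tuning: {mse(pretrained, task_data):.3f}")
print(f"loss after fine-tuning:  {mse(tuned, task_data):.3f}")
```

Because the starting point is already close, a few hundred small steps are enough in this toy case. With real foundation models, the same principle is why fine-tuning is far cheaper than training a model from scratch.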

➡️ LLMs

LLM stands for Large Language Model and is a type of Foundation Model that deals with language and numbers. When you use ChatGPT or Google Bard, it's an LLM that makes it work.
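To get a feel for what an LLM fundamentally does (predict the next word, append it, repeat), here is a toy Python sketch. It swaps in a far simpler technique than a real LLM: a so-called bigram model that just counts which word follows which in a tiny, made-up corpus. Notice that the "right answers" it learns from come from the text itself, which is the basic idea behind self-supervised learning. The function names and corpus are hypothetical; real LLMs use Transformers with billions of parameters instead of word counts.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count which word follows which. The 'labels' (next words) come from
    the text itself, so no human labeling is needed."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts

def generate(counts, start, max_words=5):
    """Greedily append the most frequent next word, one word at a time."""
    output = [start]
    for _ in range(max_words):
        followers = counts.get(output[-1])
        if not followers:
            break  # no known continuation for this word
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)

# A tiny hypothetical training corpus.
corpus = "i like ice cream . you like ice cream . ice cream is cold ."
model = train_bigram(corpus)
print(generate(model, "i", max_words=3))  # prints: i like ice cream
```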

In the video below, Nikita Namjoshi and Dale Markowitz from Google explain what an LLM is.

Beyond the Basics:

If you want to impress a friend, you can drop the following:

- The latest LLM behind ChatGPT is called GPT-4.

- Google's latest LLM, which among other things powers Bard, is called PaLM 2.

- Meta's LLM, released in partnership with Microsoft, is called Llama 2.

If you were paying attention when you watched the LLM video, you heard the term 'Transformers.' It's now time to meet the real superstars within Generative AI.

➡️ Transformers

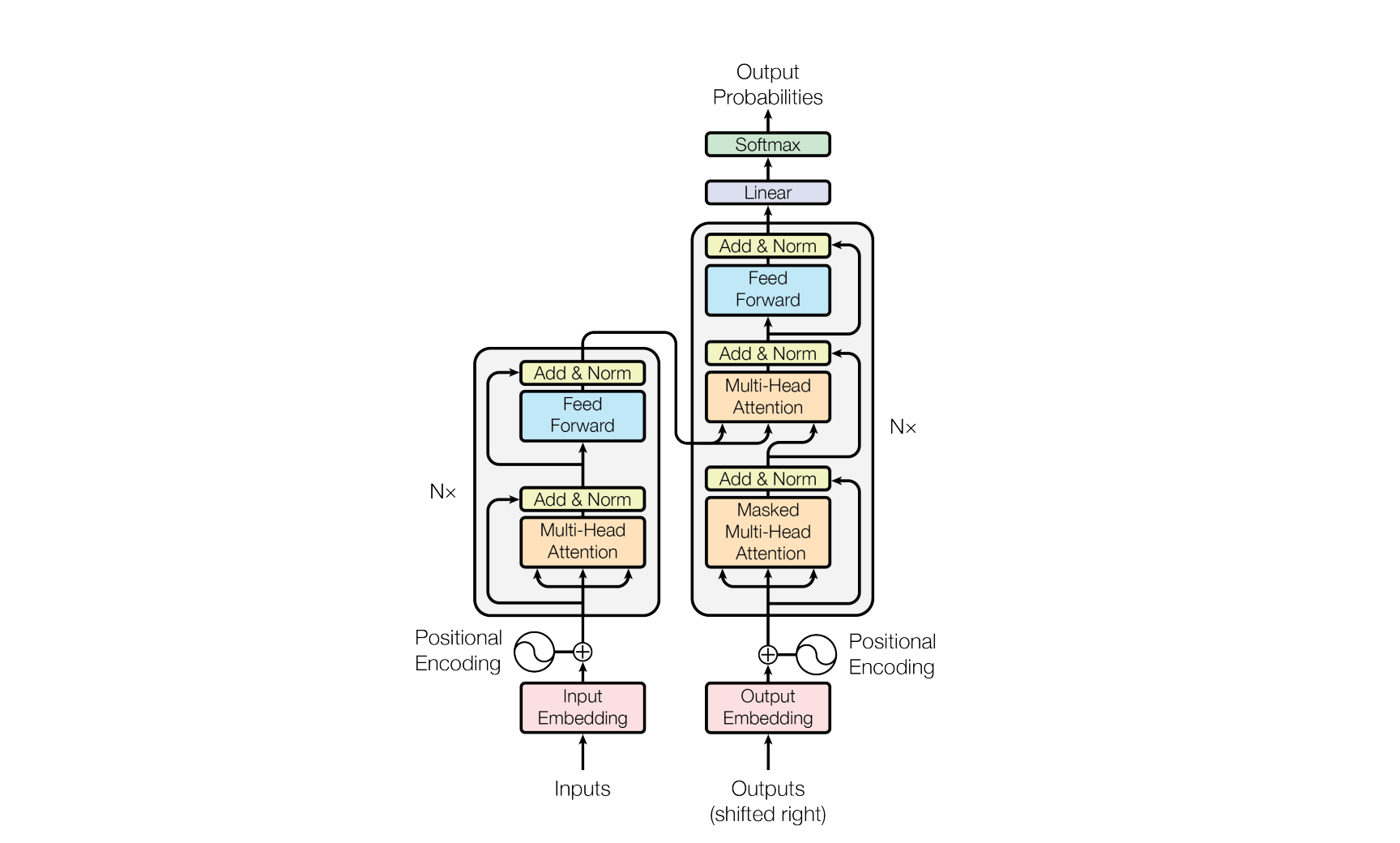

Transformer is the name of a specific model architecture (or method) within deep learning that is used as a building block in much of Generative AI.

'Transformers are considered a key, if not THE key, component to the new wave of Generative AI.'

Transformers are one of the main reasons why Foundation Models and LLMs have become so impressive. GPT-4, the LLM behind ChatGPT, is for example built on the Transformer architecture; the 'T' in GPT stands for Transformer.

'What on earth? What do you mean by models and methods??? I don't get it,' you might be thinking.

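In overly simple terms, Transformers are a smarter way to crack the nut of intelligence: instead of reading a text one word at a time, a Transformer looks at all the words at once and learns which words should pay the most 'attention' to each other. Whatever intelligence is, and whoever is really the smartest...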

Beyond the Basics:

- Transformers were first introduced by researchers at Google in the 2017 paper 'Attention Is All You Need'. In the image below, the researchers visualize how a Transformer works. It's all Greek to most people who don't develop AI solutions, but now you have at least seen it!

- If you don't want to read a highly technical PDF (the one from Google above) but still want to learn a bit more about Transformers, I can recommend this video from IBM. In it, they delve into advanced topics like Encoders, Decoders, and Attention.
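If you'd like to peek under the hood anyway, the heart of the Transformer, the attention mechanism, fits in a few lines. The paper defines scaled dot-product attention as softmax(QKᵀ/√d_k)V. The Python sketch below implements that formula on three hypothetical two-dimensional word vectors. It is a simplification: real Transformers first multiply the vectors by learned weight matrices, run many attention "heads" in parallel, and use vectors with hundreds or thousands of dimensions.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How well this query matches each key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output = weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-D vectors for the words "ice", "cream" and "cone".
words = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
# Self-attention: the same vectors act as queries, keys and values here.
out, weights = attention(words, words, words)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how much one word "attends" to every word, including itself. The weights in a row always sum to 1, and the similar vectors for "ice" and "cream" pay more attention to each other than to "cone".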

For those of you still reading, well done! Now we just have one last term to clarify.

➡️ Diffusion Models

Generative AI is also becoming increasingly skilled at generating realistic images. You've probably tried services like DALL·E from OpenAI, Midjourney (my own favorite), or Stable Diffusion.

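When it comes to visual AI, it's not just Transformers that have been at play, but also another new AI star developed in parallel: Diffusion Models. In short, a diffusion model is trained to gradually remove noise from images; to create a new image, it starts from pure noise and cleans it up step by step. In the video below, the concept is explained at 4 different levels, from basic to more advanced.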
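For those who want a taste of the math, the Python sketch below shows only the first half of the story: the "forward" process that adds noise to an image during training. It follows one common formulation, DDPM (Denoising Diffusion Probabilistic Models), where a noisy version at step t can be computed directly as x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε. The noise schedule values and the 4-pixel "image" are illustrative assumptions, and the learned reverse (denoising) step, which is what actually generates new images, is left out.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise amounts, increasing linearly (assumed DDPM-style values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: how much signal survives."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Jump straight to a step: x_t = sqrt(alpha_bar)*x0 + sqrt(1 - alpha_bar)*noise."""
    return [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
            for x in x0]

rng = random.Random(0)  # fixed seed so runs are repeatable
image = [0.8, -0.2, 0.5, 0.1]  # a hypothetical 4-pixel "image"
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
for t in (0, 250, 500, 999):
    noisy = add_noise(image, alpha_bars[t], rng)
    print(t, round(alpha_bars[t], 4), [round(p, 2) for p in noisy])
```

As t grows, ᾱ_t shrinks toward zero, so the original pixels fade and almost pure noise remains. Image generation runs this process in reverse, with a trained network predicting and removing the noise one step at a time.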

Beyond the Basics:

- Before Diffusion Models arrived, image generation mainly relied on models such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and flow-based models.

Wohooooo!!! 🦾

There you have it, all the heavy theory covered. Let's quickly recap.

- Generative AI is the umbrella term for a new wave of AI that can create unique content.

- Foundation Models are base models that are trained on a MASSIVE amount of data. And they can be used as a foundation for building new apps and solutions in Generative AI.

- LLMs are a type of Foundation Model that deals with text and numbers.

- Transformers are the ⭐️-model (although there are others) for training Generative AI models on text and numbers.

- Diffusion Models are the ⭐️-model (although there are others) for training Generative AI models on anything related to visual content.

And when we combine all of this, we have the foundation for what makes Generative AI work. We get the cone for the ice cream.

But what about the actual ice cream with all the different flavors? In other words, all the new apps and services that pop up every day that we can use. Well, we'll have to cover that another time (but it could look something like this ⬇️).

After this sugar rush, you might have gained an extra 8 minutes of energy to watch the video below, where Kate Soule from IBM summarizes much of what we've talked about. But you can also skip down to the next heading where the reward awaits.

Probably the best AI presentation of the year 🥳

We've finally reached the end and the reward!

At the end of July, Spotify released an AMAZINGLY good and educational video in which Gustav Söderström (Co-President, CPO & CTO at Spotify) gives a lecture on AI at an internal event. Fortunately for all of us, it has also been made public, both as a video podcast on Spotify and on YouTube!

If there's anything on the topic of AI that I can recommend watching, it's this. The presentation has received a lot of praise, so you may have already seen it.

In Gustav's presentation, you'll recognize and learn more about the concepts that we have covered in the text above. So settle in front of any screen and enjoy a 1.5-hour educational presentation 🍿, and come out on the other side as an upgraded 2023 human.

Additional Tips

For those of you who want to dive deeper into the world of Generative AI, I can recommend the following:

- The Future Of Generative AI Beyond ChatGPT, Forbes

- What’s the Future for A.I.?, The New York Times

- How Generative AI Is Changing Creative Work, Harvard Business Review

- What is generative AI?, McKinsey & Company

- AI-paradoxen – både vårt största hopp och ett potentiellt megahot ("The AI paradox: both our greatest hope and a potential mega-threat", written by me in Swedish)

- AI ger oss innovation med superkrafter. Vad vi kan förvänta oss 2023 och framåt ("AI gives us innovation with superpowers. What we can expect in 2023 and beyond", written by me in Swedish)

// Judith

Don't forget to subscribe to my newsletter 'Plötsligt i Framtiden' ("Suddenly in the Future") on Substack (in Swedish, though), where I write weekly articles on technology, sustainability, and the future.